Problem

You must provide a method to control some or all of the functions of the mobile device, or provide text input, without handling the device.

Most mobile devices are built around audio communications, so they can accept Voice Input in at least some ways. Some application frameworks and most web interfaces cannot receive inbound audio, but workarounds using the voice network are sometimes available. Devices not built around voice communications may lack the appropriate hardware and cannot use this pattern at all.

Solution

For decades, Voice Input has promised to relieve users of all sorts of systems from executing complex commands in unnatural or distracting ways. Some specialized, expensive products have met these goals in fields such as aviation, while desktop computing has continued to fall short of its promises and has not gained wide acceptance.

Mobile, however, is uniquely positioned to exploit voice as an input and control mechanism, and has a uniquely high demand for such a feature. Because the devices are ubiquitous, many users with low vision or poor motor function (and therefore difficulty with entry) demand alternative methods of input. Near-universal use, and contextual requirements such as safety (for example, using navigation devices while operating a vehicle), demand eyes-off and hands-off control methods.

Lastly, many mobile devices are (or are based on) mobile handsets, so they already have speakers, microphones designed for voice-quality communications, and voice processing embedded in the device chipset.

Since most mobile devices are now connected, or are only useful when connected to the network, an increasingly useful option is for a remote server to perform all the speech recognition functions. This can even be used for fairly core functions, such as dialing the handset, as long as a network connection is required to perform the function anyway. For mobile handsets, using the voice channel is especially advantageous, as no special effort is needed to gather or encode the input audio.

Variations

Voice Command uses voice to input a limited set of pre-set commands. The commands must be spoken exactly as the device expects; the device cannot interpret arbitrary commands. These can be considered akin to Accesskeys, as they are a sort of shortcut for controlling the device. Although limited, the command set is generally very large, covering the entire control domain. Often, this is enabled at the OS level, and the entire handset can be used without any button presses. Other domains can also be covered; dialing a mobile handset is a common use, in which case the entire address book also becomes part of the limited command set.
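As a minimal sketch of a closed command set, assuming the recognizer has already returned a text transcript, the Python below accepts only phrases that match an entry exactly (after normalizing case and spacing) and extends the set with a "call <name>" phrase for each address book entry. The phrases and action identifiers are hypothetical placeholders, not any platform's actual command vocabulary.

```python
from typing import Dict, List, Optional


def build_command_set(contacts: List[str]) -> Dict[str, str]:
    """Map each accepted (normalized) phrase to a hypothetical action identifier."""
    commands = {
        "open menu": "menu.open",
        "go back": "nav.back",
        "scroll down": "nav.down",
        "scroll up": "nav.up",
        "select": "nav.select",
    }
    # The address book extends the command set: one "call <name>" phrase per contact.
    for name in contacts:
        commands[f"call {name.lower()}"] = f"dial:{name}"
    return commands


def match_command(utterance: str, commands: Dict[str, str]) -> Optional[str]:
    """Return the action for an exactly matching phrase, or None if there is no match."""
    # Normalize only case and whitespace; no fuzzy matching is attempted.
    normalized = " ".join(utterance.lower().split())
    return commands.get(normalized)
```

Rejecting unmatched phrases, rather than guessing at the closest one, keeps the behavior predictable, which is the point of a fixed command set.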

Speech to Text, or "speech recognition," enables the user to enter arbitrary text by talking to the mobile device. Though methods vary widely and there are limits, the user can generally speak any word, phrase, or character and expect it to be recognized with reasonable accuracy.

Note that "voice recognition" implies user-dependent input, meaning the user must set up a voice profile before the system works. User-independent systems are strongly preferred for general use, as they can be employed without any setup by the end user. Only build user voice profiles when this would be acceptable to the user, such as when a setup process already exists and is expected.

A detailed discussion of the methods used for recognizing speech is beyond the scope of this book; the topic is covered thoroughly in a number of other sources.

Interaction Details

Constantly listening for Voice Input can drain power and lead to accidental activations. Generally, you will need to provide an activation keypress that either enables the mode momentarily (press and hold while speaking commands or text) or switches to the Voice Input mode. A common activation sequence is a long-press of the speakerphone key.

When there are no suitable hardware keys, other keys or methods must be found. On devices whose primary entry method is the screen (touch or pen), users who cannot see the screen may have trouble activating Voice Input without undue effort.
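The two activation styles can be modeled as a small key handler. This is a hypothetical, simplified sketch: the 800 ms long-press threshold is an assumed value, and the toggle is evaluated on key release rather than by a timer while the key is held, as a production system would more likely do. Repeating the long-press exits the mode, reversing the command used to enter it.

```python
from enum import Enum
from typing import Optional


class ActivationStyle(Enum):
    MOMENTARY = "momentary"  # listen only while the key is held down
    TOGGLE = "toggle"        # a long-press switches Voice Input on or off


class VoiceActivation:
    """Hypothetical handler for the key that activates Voice Input."""

    LONG_PRESS_MS = 800  # assumed long-press threshold; tune per device

    def __init__(self, style: ActivationStyle) -> None:
        self.style = style
        self.listening = False
        self._pressed_at: Optional[int] = None

    def key_down(self, t_ms: int) -> None:
        self._pressed_at = t_ms
        if self.style is ActivationStyle.MOMENTARY:
            self.listening = True  # open the microphone while the key is held

    def key_up(self, t_ms: int) -> None:
        if self._pressed_at is None:
            return
        held_ms = t_ms - self._pressed_at
        self._pressed_at = None
        if self.style is ActivationStyle.MOMENTARY:
            self.listening = False  # releasing the key ends input
        elif held_ms >= self.LONG_PRESS_MS:
            self.listening = not self.listening  # the same long-press enters or exits
        # A short press in TOGGLE style is left to the key's normal function.
```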

Consider any active Voice Input session to be entirely centered around eyes-off use, and to require hands-off use whenever possible. Couple the input method with Tones and Voice Readback as the output methods to create a complete audio UI.

When input is complete, state a Voice Readback of the command as interpreted before committing it. Offer a brief delay after the readback so the user may correct or cancel the command. Without further input, the command is executed.

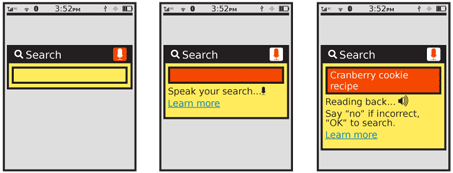

For Speech to Text, this readback phase should happen once the entire field is completed, or when the user requests readback in order to confirm or correct the entry.
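The readback-and-delay step can be sketched as a single function. The `speak`, `listen`, and `execute` callables are hypothetical hooks standing in for the platform's text-to-speech, recognizer, and command dispatcher; `listen` is assumed to return `None` when the user stays silent for the whole delay. The 2-second default and the reply vocabularies are assumptions to be tuned through testing.

```python
from typing import Callable, Optional

# Hypothetical reply vocabularies; a real product would define its own.
CONFIRM_WORDS = {"yes", "ok", "okay"}
CANCEL_WORDS = {"cancel", "stop", "no"}


def confirm_and_commit(
    interpreted: str,
    speak: Callable[[str], None],
    listen: Callable[[float], Optional[str]],
    execute: Callable[[str], None],
    grace_s: float = 2.0,
) -> str:
    """Read back a command, then commit it unless the user intervenes.

    Returns "executed", "cancelled", or "correct" (the caller restarts entry).
    """
    speak(interpreted)       # Voice Readback of the command as interpreted
    reply = listen(grace_s)  # None: the user stayed silent for the whole delay
    if reply is None or reply.strip().lower() in CONFIRM_WORDS:
        execute(interpreted)  # no further input (or an explicit yes) commits it
        return "executed"
    if reply.strip().lower() in CANCEL_WORDS:
        return "cancelled"
    return "correct"  # any other speech is treated as a request to correct
```

Treating an unrecognized reply as a correction, rather than as consent, errs on the side of not executing a command the user may have been trying to stop.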

You must carefully design the switch between Voice Command and Speech to Text. Special phrases can be spoken to switch to Voice Command during a Speech to Text session. Key presses or gestures may also be used, but they may violate the key use cases behind the need for a Voice Input system.
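A minimal sketch of phrase-based switching, assuming one transcript per utterance: reserved phrases are checked before anything is typed, so they are never entered into the field. Both phrases here are hypothetical; in practice they should be unlikely to occur in ordinary dictation.

```python
from dataclasses import dataclass, field
from typing import List

SWITCH_TO_COMMAND = "switch to commands"  # hypothetical reserved phrase
SWITCH_TO_DICTATION = "start dictation"   # hypothetical reserved phrase


@dataclass
class VoiceSession:
    mode: str = "dictation"  # "dictation" (Speech to Text) or "command" (Voice Command)
    text: List[str] = field(default_factory=list)
    commands: List[str] = field(default_factory=list)

    def hear(self, utterance: str) -> None:
        phrase = " ".join(utterance.lower().split())
        # Reserved phrases are checked first, in either mode, so they are never typed.
        if phrase == SWITCH_TO_COMMAND:
            self.mode = "command"
        elif phrase == SWITCH_TO_DICTATION:
            self.mode = "dictation"
        elif self.mode == "dictation":
            self.text.append(utterance)  # keep the user's original casing
        else:
            self.commands.append(phrase)  # hand off to the Voice Command matcher

    @property
    def field_value(self) -> str:
        return " ".join(self.text)
```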

Correction generally requires complete re-entry, though IVR-style lists can be used, either to offer examples or to let the user choose from a list of items.
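When the recognizer can return a ranked list of alternatives (an assumption; not all engines expose one), an IVR-style correction list might be built and parsed as below. The `correction_prompt` and `pick_alternative` names are hypothetical, and any reply that is not a list choice falls back to complete re-entry.

```python
from typing import List, Optional

ORDINALS = ["one", "two", "three", "four", "five"]


def correction_prompt(alternatives: List[str], limit: int = 3) -> str:
    """Build a spoken, numbered list from the top recognition alternatives."""
    options = alternatives[:min(limit, len(ORDINALS))]
    parts = [f"say {ORDINALS[i]} for {opt}" for i, opt in enumerate(options)]
    return "Did you mean: " + ", ".join(parts) + "? Or say the entry again."


def pick_alternative(reply: str, alternatives: List[str], limit: int = 3) -> Optional[str]:
    """Map a spoken choice ("two" or "2") to an alternative; None means re-enter."""
    options = alternatives[:min(limit, len(ORDINALS))]
    word = reply.strip().lower()
    if word.isdigit():
        idx = int(word) - 1
    elif word in ORDINALS:
        idx = ORDINALS.index(word)
    else:
        return None  # not a list choice: treat it as complete re-entry
    return options[idx] if 0 <= idx < len(options) else None
```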

Consider Voice Command in the same way you would a 5-way pad on a touchscreen device. Include as much interactivity as possible to offer a complete voice UI. When controlling the device OS, users must be able to perform all the basic functions, so offer controls such as Directional Entry and the ability to activate menus. This may also mean that a complete scroll-and-select focus assignment system is required, even for devices that otherwise rely purely on touch or pen input.
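As a hypothetical sketch of voice-driven scroll-and-select, the class below moves focus through on-screen items using Directional Entry commands and returns the focused item when the user says "select." The command words are assumptions, and the item list is assumed to be non-empty; in a full audio UI, each focus move would also be announced with a Voice Readback.

```python
from typing import List, Optional


class VoiceFocus:
    """Hypothetical scroll-and-select focus driven by spoken Directional Entry."""

    def __init__(self, items: List[str]) -> None:
        self.items = items  # assumed non-empty
        self.index = 0      # focus starts on the first item

    def handle(self, command: str) -> Optional[str]:
        """Move focus, clamped to the list ends; return the item on "select"."""
        if command in ("down", "next"):
            self.index = min(self.index + 1, len(self.items) - 1)
        elif command in ("up", "previous"):
            self.index = max(self.index - 1, 0)
        elif command == "select":
            return self.items[self.index]
        return None

    @property
    def focused(self) -> str:
        return self.items[self.index]
```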

Provide an easy way to exit Voice Input and return to keyboard or touchscreen entry without abandoning the entire current process. The best method reverses the command used to enter the mode, such as the press-and-hold of the speakerphone key.

Presentation Details

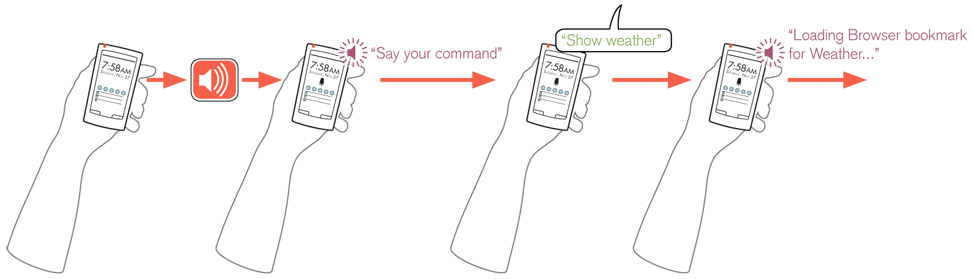

Whenever the Voice Input mode becomes active, announce this in the audio channel with a brief Tone. When the mode is rarely used, for first-time users, or whenever a tone may be confusing, a Voice Readback of the condition (e.g., "Say a command") may be used instead. After this announcement is completed, the system accepts input.

All Voice Input should have a visual component, to support glancing at the device or completing the task by switching to a hands-on/eyes-on mode. While the Voice Input mode is active, clearly indicate this at all times. The indicator may be very similar to an Input Method Indicator, or may even reside in the same location, especially in Speech to Text modes.

Provide on-screen hints for activating Voice Command or Speech to Text modes. Use common shorthand icons, such as a microphone, when possible. For Speech to Text especially, the hint may be provided as a Mode Switch or placed adjacent to the input mode switch.

When space allows (as in a search, which provides text input into a single field), display on-screen instructions so first-time users can become accustomed to the functionality of the speech-to-text system. Additional help documentation, including lists of command phrases for Voice Command, should also be made available, either as text on screen or as a Voice Readback.

Antipatterns

Audio systems and processing cannot be relied on to be "full duplex," or to hear at full fidelity while speaking. Leave adequate pauses so that tones and readback output do not get in the way of user input. Consider the extra cognitive load on users in the environments where Voice Input is most needed; they may require much more time to make decisions than in focused environments, so pauses must be longer than for visual interfaces. These timings may not emerge even from typical lab studies, and will require additional research.
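Because full duplex cannot be assumed, one way to apply this is to open the microphone only after output has ended plus a short guard gap, and to stretch the response window in high-load contexts. The sketch below is hypothetical: all three values are placeholders, not recommendations, and real timings should come from testing in the target environment.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class PromptTiming:
    """Hypothetical timing for a half-duplex voice turn (all values in ms)."""

    guard_ms: int = 300         # silence after a Tone or readback before the mic opens
    base_window_ms: int = 3000  # time allowed for the user to respond
    load_factor: float = 1.0    # > 1.0 for high-cognitive-load contexts, e.g. driving

    def listen_window(self, output_end_ms: int) -> Tuple[int, int]:
        """Return (open_ms, close_ms) for the mic, given when audio output ends.

        The window starts after output ends, so listening never overlaps speaking.
        """
        open_ms = output_end_ms + self.guard_ms
        close_ms = open_ms + round(self.base_window_ms * self.load_factor)
        return open_ms, close_ms
```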

Discuss & Add

VUI

I sometimes wonder if we made a mistake breaking voice into a couple of components. Or maybe there should have been a whole section on VUI and non-screen interfaces (accessibility, too?).

Anyway, nice overview of some voice considerations here. Shallow, but codified usefully:

And this is a good overview of speech recognition:

Moto X Always Listening

Finally, a mainstream product with always-listening voice commands. A specific voice activation sequence is required, but no more button presses: http://www.neogaf.com/forum/showthread.php?t=622861

Early rumors are mixed: some say it is passive (meaning, to me, that it uses acoustical and algorithmic tricks), and some say it uses a dedicated low-power chip. We'll see.
