Problem

You must build interactivity so that a handheld remote device -- or the user alone -- is the best, only or most immediate method to communicate with another, nearby device with a display.

Remote Gestures rely on hardware solutions that are not very common. However, the increasing use of sensors and of game systems specifically designed to capture this input may make them very common in the mid term.

Solution

Remote control has been used for centuries as a convenience or safety method, to activate machinery from a distance. Electronic or electro-mechanical remote control has generally simply mapped local functions like buttons onto a control head removed from the device being controlled.

Ready availability of accelerometers, machine vision cameras and other sensors now allows the use of gesture to control or provide ambient input.

Combining the concepts and technology of Kinesthetic Gestures with fixed hardware allows remote control that begins to approach a "natural UI" and can eliminate the need for users to learn a superimposed control paradigm or set of commands. While these have so far mostly been used to communicate with fixed devices such as TV-display game systems, they could also serve as a method of interacting in contextually-difficult scenarios like driving, walking, presenting, or in dirty or dangerous work environments.

Variations

You will find Remote Gestures may be used for:

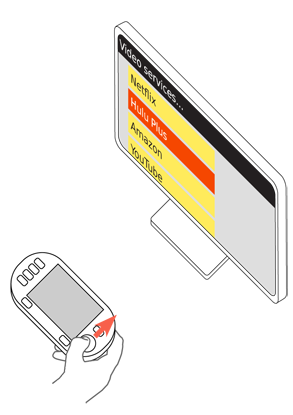

Pointing -- Direct control over a cursor or other position-indicating item on the remote screen.

Gesturing -- Direct, but limited control over portions of the interface, via gestures that map to obvious physical actions like scrolling.

Sensing -- The user, or the device as a proxy for the user, is sensed for ambient response purposes, much as the User Movement and User Proximity methods are used in Kinesthetic Gestures.

Additional methods, such as initiating processes, may emerge, but as implemented now are covered under Kinesthetic Gestures.

Methods are very simply divided between device pointing and non-device gestures.

Remote device -- A hand-held or hand-mounted device is used to accept the commands and send them to another device some distance away. The hand-held device may be a dedicated piece of hardware or be a mobile phone or similar general-purpose computer operating in a specific mode. This is most clearly exemplified by the Wii, though many other devices use some portion of this control methodology, including some industrial devices and "3D mice."

User -- User gestures, including hand, head and body position, speed, attitude, orientation and configuration are directly detected by the target device. Most clearly exemplified by the Xbox Kinect, this is also used transparently to provide Simulated 3D Effects on some mobile and handheld devices.

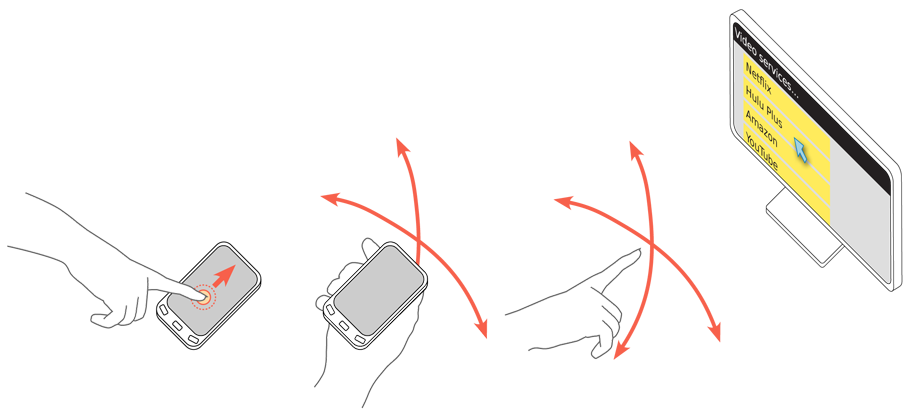

This pattern is mostly concerned with gestures themselves, and does not explicitly describe the use of buttons, Directional Entry pads or other interactivity on a remote control. These interactions, in general, will be similar to those on self-contained hardware, but an additional interaction will occur on the remote device, as described below. For example, if On-screen Gestures are used as the pointing control, the handheld device will accept the input and display output as appropriate on the device in hand, and a cursor and output will appear on the remote display as well.

For certain devices, or certain portions of the control set, gestures will simply not be suitable or will not provide a sufficient number of functions. You might need to use buttons, Directional Entry pads, Keyboards & Keypads or other devices. Use the most appropriate control method for the particular required input.

Interaction Details

Pointing may be used to control on-screen buttons, access virtual keyboards and perform other on-screen actions as discussed in the remainder of this book. When touch or pen controls are referred to, here or elsewhere, a remote pointing gesture control can generally perform the same input.

Remote pointing differs from touch or most pen input, in that the pointer is not generally active but simply points. A "mouse button" or ok/enter key -- or another gesture -- must be used to initiate commands, much as with a mouse in desktop computing.

Make control gestures interact directly with the screen. When the hand is used (without a remote device), a finger may be sensed as a pointer, but an open hand as a gesture. In this case, if the user moves up and down with an extended finger, you can make the cursor move. However, moving up and down with the hand open will be perceived as a gesture and scroll the page. This provides two input methods without a need for traditional Mode Switches or addressing specific regions of the screen.
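The finger-versus-open-hand distinction above can be sketched as a simple dispatch on the sensed hand pose. This is a minimal illustration only: `HandPose`, `ScreenState` and `apply_vertical_motion` are hypothetical names, and it assumes some upstream sensor has already classified the pose and reported the vertical movement in pixels.

```python
from dataclasses import dataclass
from enum import Enum


class HandPose(Enum):
    """Hypothetical pose labels, assumed to come from the sensing hardware."""
    POINTING = "pointing"  # one extended finger
    OPEN = "open"          # open hand


@dataclass(frozen=True)
class ScreenState:
    cursor_y: float = 0.0  # cursor position, in pixels
    scroll_y: float = 0.0  # page scroll offset, in pixels


def apply_vertical_motion(state: ScreenState, pose: HandPose, dy: float) -> ScreenState:
    """Route the same vertical hand movement by pose, with no explicit mode switch."""
    if pose is HandPose.POINTING:
        # An extended finger moves the cursor.
        return ScreenState(state.cursor_y + dy, state.scroll_y)
    if pose is HandPose.OPEN:
        # An open hand is treated as a gesture and scrolls the page.
        return ScreenState(state.cursor_y, state.scroll_y + dy)
    return state
```

The same movement produces two different results depending only on how the hand is held, which is what removes the need for a separate mode control.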

Decisions on the mapping of gesture to control are critical. You must make them natural, and related to the physical condition being simulated. Scroll, for example, simulates the screen being an actual object, with only a portion visible through the viewport. Interactions that have less-obvious relationships should simply resort to pointing, and use buttons or other control methods.

Some simple controls may still need to be mapped to buttons due to cultural familiarity. A switch from "day" to "night" display mode may be perceived as similar to turning on the lights. Even if required often, a gesture will not map easily, as the expected condition is one of "flipping a light switch."

Sensing is very specific to the types of input being sensed, so cannot be easily discussed in a brief outline such as this.

Presentation Details

For navigating or pointing, always provide a Cursor. Focus may also be indicated as required, for entry fields or other regions. Use the scroll-and-select paradigms to indicate focus. The nature of the pointing device requires that a precise cursor always be used, even if a fairly small focus region is also highlighted. See the Focus & Cursors pattern for details on the differences, and implementation.

When a Directional Control is also available (such as a 5-way pad on top of the remote), you may need to switch between modes. When the buttons are used, the cursor disappears. Preserving the focus paradigm in all modes will help avoid confusion and refreshing of the interface without deliberate user input.
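One way to picture this is a mode switch that changes only the mode and the cursor visibility, and leaves the focused item untouched. The sketch below is an assumption-laden illustration: `InputMode`, `UiState`, `switch_mode` and the `"search_field"` identifier are all hypothetical, not part of any particular toolkit.

```python
from dataclasses import dataclass, replace
from enum import Enum


class InputMode(Enum):
    POINTER = "pointer"          # remote pointing gestures
    DIRECTIONAL = "directional"  # 5-way pad or similar buttons


@dataclass(frozen=True)
class UiState:
    mode: InputMode
    focused_id: str       # hypothetical identifier of the item in focus
    cursor_visible: bool


def switch_mode(state: UiState, new_mode: InputMode) -> UiState:
    """Change input mode without touching focus, so nothing on screen resets."""
    return replace(
        state,
        mode=new_mode,
        # The cursor is only shown while pointing; focus is carried over as-is.
        cursor_visible=(new_mode is InputMode.POINTER),
    )
```

For example, switching from `POINTER` to `DIRECTIONAL` while `"search_field"` is focused hides the cursor but keeps `"search_field"` in focus, and switching back restores the cursor without moving focus.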

For direct control, such as of a game avatar, the element under control immediately reflects the user actions, so acts as the cursor. You are likely to find that a superimposed arrow cursor is not important. If the avatar departs the screen for any reason, a cursor must appear again, even if only to indicate where the avatar is relative to the viewport, and that control input is still being received.

When sensing is being used, an input indicator should be shown, so the user is aware that sensing is occurring. Ideally, input constraints should be displayed graphically. For example, as a bar of total sense-able range, with a clearly-defined "acceptable input" area in the middle.
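The bar-style indicator described above could be driven by a small mapping from the sensed reading to a marker position and an in-range flag. This is only a sketch: `RangeIndicator` and `range_indicator` are hypothetical names, and the units and limits of the sensed value are assumed to be known for the sensor in use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RangeIndicator:
    fraction: float   # marker position along the bar, 0.0 to 1.0
    acceptable: bool  # True when the reading is inside the acceptable band


def range_indicator(reading: float, sense_min: float, sense_max: float,
                    ok_min: float, ok_max: float) -> RangeIndicator:
    """Map a sensed reading onto a bar spanning the total sense-able range."""
    if sense_max <= sense_min:
        raise ValueError("sense_max must be greater than sense_min")
    # Clamp so the marker never leaves the drawn bar.
    clamped = min(max(reading, sense_min), sense_max)
    fraction = (clamped - sense_min) / (sense_max - sense_min)
    # Acceptability is judged on the raw reading, not the clamped one.
    return RangeIndicator(fraction, ok_min <= reading <= ok_max)
```

A renderer would draw the full bar, shade the span from `ok_min` to `ok_max` as the "acceptable input" area, and place the marker at `fraction`.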

When a gestural input is not as obvious as a cursor, you have to communicate the method of control. The best methods of this are in games, where first-use presents "practice levels" where only a single type of action is required (or sometimes even allowed) at a time, accompanied by instructional text. The user is generally given a warning before entering the new control mode, and until that time either has conventional pointing control or can press an obvious button (messaged on screen) to exit or perform other actions. This single action is often something like learning to control a single axis of movement, before any other actions are introduced.

When game-like instruction of this sort is not suitable, another type of on-screen instruction should be given, such as a Tooltip or Annotation, often with an overlay, graphically describing the gesture. These should no longer be offered once the user has successfully performed the action, and you must always allow the user to dismiss them. The same content must either be able to be turned back on, or be made available in a help menu.
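The lifecycle of such instructional overlays can be sketched as a small piece of state: a hint is shown until the user succeeds or dismisses it, and can be brought back from a help menu. `GestureHints` and the gesture name used below are hypothetical; persistence across sessions is not shown.

```python
class GestureHints:
    """Tracks which gesture hints should still be offered to the user."""

    def __init__(self) -> None:
        self._hidden: set[str] = set()

    def should_show(self, gesture: str) -> bool:
        return gesture not in self._hidden

    def record_success(self, gesture: str) -> None:
        # Stop offering the hint once the gesture has been performed correctly.
        self._hidden.add(gesture)

    def dismiss(self, gesture: str) -> None:
        # The user must always be able to close a hint.
        self._hidden.add(gesture)

    def restore_all(self) -> None:
        # Called from a help or settings menu to turn the hints back on.
        self._hidden.clear()


hints = GestureHints()
hints.record_success("open_hand_scroll")  # hypothetical gesture name
```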

Antipatterns

Be careful relying on Remote Gestures for the primary interface, and for large stretches of input. Despite looking cool in movies, user movement as the primary input method is inherently tiring. If required for workplaces, repetitive stress injuries are likely to emerge.

Do not attempt to emulate touch-screen behaviors by allowing functions like gesture scroll when the "mouse button" is down. Instead, emphasize the innate behaviors of the system. To scroll, for example, use over-scroll detection to move the scrollable area when the pointer approaches the edge.
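As a rough sketch of over-scroll detection, the function below returns a scroll speed that ramps up as the pointer nears the top or bottom edge of the viewport. The name `edge_scroll_velocity`, the 40 pixel edge zone and the 600 pixels-per-second maximum are illustrative assumptions, and the sign convention (negative scrolls toward the top) is arbitrary.

```python
def edge_scroll_velocity(pointer_y: float, viewport_height: float,
                         edge_zone: float = 40.0, max_speed: float = 600.0) -> float:
    """Return scroll speed in px/s: negative near the top edge,
    positive near the bottom edge, and 0.0 in the middle of the viewport."""
    if pointer_y < edge_zone:
        # Depth runs from 0.0 at the zone boundary to 1.0 at the very edge.
        depth = (edge_zone - max(pointer_y, 0.0)) / edge_zone
        return -max_speed * depth
    if pointer_y > viewport_height - edge_zone:
        depth = (min(pointer_y, viewport_height) - (viewport_height - edge_zone)) / edge_zone
        return max_speed * depth
    return 0.0
```

Ramping the speed with depth, rather than switching it on at full speed, keeps the scrolling from feeling abrupt when the pointer drifts into the edge zone.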

Be aware of the problem of pilot-induced oscillation (PIO) and take steps to detect and alleviate it. PIO arises from a match between the frequency of the feedback loop in the interactive system, and the frequency of response of users. While similar behaviors can arise in other input/feedback environments, they lead to single-point errors. In aircraft, the movement of the vehicle (especially in the vertical plane) can result in the oscillation building, possibly to the point of loss of control. The key facet of many remote gesturing systems -- being maneuvered in three dimensions, in free-space -- can lead to the same issues. These cannot generally be detected during design, but must be tested for. Alleviate this by reducing the control lag, or reducing the gain in the input system. The unsuitability of a response varies by context; games will tolerate much more "twitchy" controls than pointing for entry on a virtual keyboard.
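One possible, deliberately crude way to detect and damp such oscillation at run time is to count direction reversals in recent pointer movement and lower the input gain while they persist. Everything here is an assumption made for illustration: the `OscillationDamper` name, the window of 8 samples, the limit of 4 reversals and the halved gain are untested placeholders, and deliberate back-and-forth gestures would trigger it too. Real thresholds would have to come from user testing.

```python
from collections import deque


class OscillationDamper:
    """Lowers input gain while recent movement keeps reversing direction."""

    def __init__(self, window: int = 8, reversal_limit: int = 4,
                 base_gain: float = 1.0, damped_gain: float = 0.5) -> None:
        self.deltas: deque[float] = deque(maxlen=window)  # recent movement deltas
        self.reversal_limit = reversal_limit
        self.base_gain = base_gain
        self.damped_gain = damped_gain

    def reversals(self) -> int:
        """Count sign changes between consecutive non-zero deltas in the window."""
        signs = [1 if d > 0 else -1 for d in self.deltas if d != 0]
        return sum(1 for a, b in zip(signs, signs[1:]) if a != b)

    def apply(self, delta: float) -> float:
        """Record one movement delta and return it scaled by the current gain."""
        self.deltas.append(delta)
        oscillating = self.reversals() >= self.reversal_limit
        gain = self.damped_gain if oscillating else self.base_gain
        return delta * gain
```

This sketch addresses only the gain side of the advice above. It adds no filtering, since smoothing the input would add lag, which is the other contributor to the problem.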

Mode changes on the screen, such as between different types of pointing devices, should never refresh the display or reset the location in focus.

Avoid requiring the use of scroll controls, and other buttons or on-screen controls where a gestural input would work better. Such controls may be provided as backups, as indicators with secondary control only, or for the use of other types of pointing devices.


Discuss & Add

Please do not change content above this line, as it's a perfect match with the printed book. Everything else you want to add goes down here.

Patterns and Best Practices

Others are starting to emerge!

Examples

PIO introductory article: http://dtrs.dfrc.nasa.gov/archive/00001004/01/210389v2.pdf "Introductory" isn't saying much. This is a very complex phenomenon, and is only really worked by HF black belt types, so good luck if you need to fix it without the budget for these guys.
