
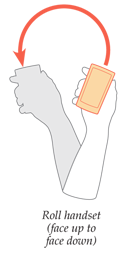

Orientation changes that are directly accounted for by switching screen orientation are covered under their own pattern. However, other types of device orientation can be used to signal that the device should perform an action, such as the relatively common face-down to lock. Orientation is also useful when combined with other sensors to perform other activities; raising a phone towards the user is very different when face up vs. face down.

Problem

Mobile devices should react to user behaviors, movements and the relationship of the device to the user in a natural and understandable manner.

Sensors of all sorts are provided with most mobile devices, and can be used by applications and the device OS. Devices without suitable sensors, or those where the sensor output is limited, may not be able to take advantage of these. Despite several attempts, web browsers so far generally will not pass sensor data to individual pages, though some can be made available with operator-level agreements.

Solution

Kinesthetics is the ability to detect movement of the body; while usually applied in a sense of self-awareness of your body, here it refers to the mobile device using sensors to detect and react to proximity, action and orientation.

The most common sensor is the accelerometer, which, as the name says, measures acceleration along a single axis. As utilized in modern mobile devices, accelerometers are very compact, integrated micro electro-mechanical systems, usually with detectors for all three axes mounted to a single frame.

In certain circles, the term "accelerometer" is beginning to be conflated with the behavior it enables, in the same way that GPS is conflated with Location. Other sensors can and should be used for detection of Kinesthetic gestures, including cameras, proximity sensors, magnetometers (compasses), audio, and close-range radios such as NFC, RFID and Bluetooth. All these sensors should be used in coordination with each other to cancel errors, with each detecting its own piece of the world.

Kinesthetic gesturing is largely a matter of detecting incidental or natural movements and reacting in appropriate or expected manners. Unlike on-screen gestures -- which form a language and can become abstracted very easily -- kinesthetic gesture is exclusively about the environment and how the device lives within that context. Is the device moving? In what manner? In relation to what? How close to the user, or other devices?

When designing mobile devices, or applications that can access sensor data, you should consider what various types of movement, proximity and orientation mean, and behave appropriately.

Specific subsets of device movement such as Orientation have specialized behaviors and are covered separately. Location is also covered in its own pattern, but both of these are only broken out as they are currently used in specific ways. Subsidiary senses of position such as those used in augmented reality and at close range (e.g. within-building location) may have some overlaps, but are not yet established enough for patterns to emerge.

The use of a secondary device for communicating gesture, such as a game controller, is covered under the Remote Gestures pattern.

Variations

Only some of the variations of this pattern are explicitly kinesthetic, and sense user movement. Others are primarily detecting position, such as other device proximity.

Device Orientation - Relative orientation to the user, or absolute orientation relative to the ground. These can be either incidental or natural gestures. For example, when rotated screen down, and no other movement is detected, the device may be set to "meeting mode" and all ringers are silenced; as the screen is the "face" of the device, face-down means it is hidden or silenced.

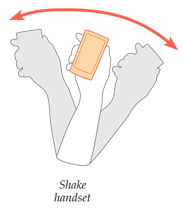
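The face-down "meeting mode" example can be sketched as a check on a short run of accelerometer readings. This is only an illustration: the `AccelSample` type, the axis convention (z reads about +9.81 m/s² when the screen faces up) and both tolerance values are assumptions, not part of any particular platform API.

```python
from dataclasses import dataclass

GRAVITY = 9.81  # m/s^2, standard gravity


@dataclass
class AccelSample:
    x: float
    y: float
    z: float  # assumed convention: about +9.81 when the screen faces up


def _spread(values):
    """Difference between the largest and smallest reading."""
    return max(values) - min(values)


def is_face_down_and_still(samples, tilt_tolerance=1.5, motion_tolerance=0.5):
    """Return True if every sample reads gravity along -z and no axis varies much."""
    if not samples:
        return False
    # Face down: gravity shows up as roughly -9.81 on the z axis.
    face_down = all(abs(s.z + GRAVITY) <= tilt_tolerance for s in samples)
    # Still: readings on each axis stay within a narrow band.
    still = all(
        _spread([getattr(s, axis) for s in samples]) <= motion_tolerance
        for axis in ("x", "y", "z")
    )
    return face_down and still
```

Requiring stillness as well as orientation is what separates "placed on the table" from "briefly turned over while being carried".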

Device Gesturing - Deliberate gestures, moving the device through space in a specific manner. Usually related to natural gestures, but may require some arbitrary mapping to behaviors. This may require learning. An example is the relatively common behavior of shaking to clear, reset or delete items.

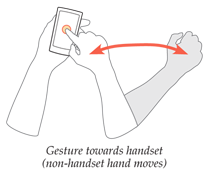
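A shake gesture such as shake-to-clear might be recognized by counting bursts of high acceleration within a short time window. The sketch below assumes samples arrive as `(timestamp_s, x, y, z)` tuples in m/s²; the threshold, peak count and window length are illustrative values that would need tuning on real hardware.

```python
import math


def detect_shake(samples, threshold=15.0, min_peaks=3, window_s=1.0):
    """Return True once `min_peaks` bursts above `threshold` fall within `window_s`.

    samples: iterable of (timestamp_s, x, y, z) tuples in m/s^2.
    """
    peak_times = []
    above = False  # whether the previous sample was above the threshold
    for t, x, y, z in samples:
        magnitude = math.sqrt(x * x + y * y + z * z)
        if magnitude > threshold and not above:
            # Rising edge: a new burst starts here.
            peak_times.append(t)
            # Forget bursts that are too old to belong to the same gesture.
            peak_times = [p for p in peak_times if t - p <= window_s]
            if len(peak_times) >= min_peaks:
                return True
        above = magnitude > threshold
    return False
```

Counting rising edges rather than raw samples keeps one long, hard jolt (a dropped phone, for instance) from being read as a deliberate shake.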

User Movement - Incidental movement of the user is detected and processed to divine the user's current behavioral context. For example, gait can be detected either when the device is in hands or in a pocket; the difference between walking, running, and sometimes even restless vs. working (e.g. at a desk at work) can be detected. Vibration generally cannot be detected by accelerometers, so riding in vehicles must be detected by location sensors. Other user movements include swinging the arm, such as moving a handset towards the user's head.
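One simple way to separate walking from running is by step cadence. The sketch below assumes step timestamps have already been extracted from the accelerometer signal by some other routine; the function name and both cadence thresholds are hypothetical and purely illustrative.

```python
def classify_cadence(step_times, walk_min_hz=1.0, run_min_hz=2.5):
    """Classify movement from a sorted list of step timestamps in seconds.

    Returns "still", "walking" or "running". Thresholds are illustrative only.
    """
    if len(step_times) < 2:
        return "still"
    duration = step_times[-1] - step_times[0]
    if duration <= 0:
        return "still"
    # Number of step intervals divided by the time they span.
    steps_per_second = (len(step_times) - 1) / duration
    if steps_per_second >= run_min_hz:
        return "running"
    if steps_per_second >= walk_min_hz:
        return "walking"
    return "still"
```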

User Proximity - Proximity detectors, whether cameras, acoustical or infra-red, can be used to detect how close the device is to a passive object, such as the user's head. A common example is for touch-centric devices to lock the screen when approaching the user's ear, so talking on the handset does not perform accidental inputs. Moving away from the user is also detected, so the handset is unlocked without direct user input.

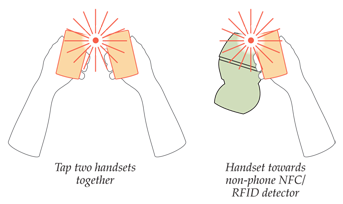

Other Device Proximity - Non-passive devices, such as other mobiles or NFC card readers, can be detected by the use of close-range radio transmitters. As the device approaches, it can open the appropriate application -- sharing or banking -- without user interaction. The user will generally have to complete the action, to confirm that this was not accidental, spurious or malicious.

Proximity to signals or other environmental conditions further than a few inches away is not widely used as yet. When radio, audio or visual cues become commonly available they are likely to be used in the same way as these, and simply added on as another type of signal to be processed and reacted to.

These methods of gesturing can initiate actions in three categories:

State Change -- The entire device reacts to a conditional change with a state change, such as the handset screen and keys locking when against the user's ear on a phone call.

Process Initiation -- Similar to state change, in that the gesture or presence of another device causes a new state to be entered. However, this is manifested as an application launch or similar action; it is not a device-wide state change that must be reversed to continue, and the application can be set aside temporarily at any moment.

Control -- Akin to the use of On Screen Gestures, a Kinesthetic Gesture is associated with a specific action within the interface, such as shake-to-clear. Pointing and scrolling are also available, but in practice are rarely used outside of game environments.

Interaction Details

The core of designing interactions for Kinesthetic Gestures is determining which gestures and sensors are to be used: not just a single gesture, but which of them, in combination, can most accurately and reliably detect the expected condition. For example, it is a good idea to lock the keypad from input when it is placed to the ear during a call. If you tried to use any one sensor alone, that would be unreliable or unusable. If you combine accelerometers (moving towards the head) with proximity sensors or cameras (at the appropriate time of movement, an object of head size approaches), this can be made extremely reliable.
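The lock-at-the-ear example can be sketched as a rule that fires only when several signals agree. The `SensorSnapshot` fields and the one-second coincidence window are assumptions made for illustration; a real device would derive these events from its own sensor stack.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorSnapshot:
    in_call: bool
    raise_detected_at: Optional[float]  # time (s) the accelerometer saw a raise-to-head motion
    proximity_near_at: Optional[float]  # time (s) the proximity sensor reported a close object


def should_lock_for_ear(snapshot, max_gap_s=1.0):
    """Lock only if a call is active and both sensors fired close together in time."""
    if not snapshot.in_call:
        return False
    if snapshot.raise_detected_at is None or snapshot.proximity_near_at is None:
        return False
    gap = abs(snapshot.proximity_near_at - snapshot.raise_detected_at)
    return gap <= max_gap_s
```

Neither signal is trusted alone: a hand passing over the proximity sensor, or a raise with nothing nearby, leaves the screen unlocked.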

Kinesthetic Gestures reinforce other contextual use of devices. Do not forget to integrate them with other known information from external sources (such as server data), location services and other observable behaviors. A meeting can be surmised from travel to a meeting location, at the time scheduled, then being placed on a table. The device could then behave appropriately, and switch to a quieter mode even if not placed face-down. You should also consider patterns of use, and adjust the reactions according to what the user actually did after a particular type of data was received.
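The meeting inference described above might combine calendar data, location and stillness along these lines. Everything named here (`Meeting`, `likely_in_meeting`, the five-minute grace period, matching places by simple string equality) is a hypothetical simplification.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Meeting:
    start: datetime
    end: datetime
    place: str


def likely_in_meeting(meeting, now, current_place, device_still,
                      grace=timedelta(minutes=5)):
    """Guess that a meeting is under way when time, place and stillness all agree."""
    in_time = meeting.start - grace <= now <= meeting.end
    at_place = current_place == meeting.place
    return in_time and at_place and device_still
```

A device could use a positive result to switch to a quieter mode even when it has not been placed face-down.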

Context must be used to load correct conditions and as much relevant information as possible. When proximity to another device opens a Bluetooth exchange application, it must also automatically request to connect to the other device (not simply load a list of cryptic names from which the user must choose), and open the service type being requested.

All State Change gestures should be reversible, usually with a directly opposite gesture. Removing the phone from the ear must also be sensed, reliably and quickly enough that the device unlocks immediately and there is no delay in its use.

Some indication of the gesture must be presented visually. Very often, this is not explicit (such as an icon or overlay) but switches modes at a higher level. When a device is locked due to being placed face-down, or near the ear, the screen is also blanked. Aside from saving power when there is no way to read the screen anyway, this serves to signal that input is impossible in the event that it does not unlock immediately.

Non-gestural methods must be available to remove or reverse state-changing gestures. For example, if sensors do not unlock the device in the examples above, the conventional unlock method (e.g. power/lock button) must be enabled. Generally, this means that Kinesthetic Gestures should simply be shortcuts to existing features.
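The reversibility and fallback rules above can be sketched as a small state holder. The class and method names are hypothetical; the point is that the opposite gesture and the conventional control both lead to the same unlock path.

```python
class ScreenLock:
    """Tracks a gesture-driven lock that can always be reversed manually."""

    def __init__(self):
        self.locked = False
        self.screen_on = True

    def on_near_ear(self):
        # State change: lock input and blank the screen as the visual cue.
        self.locked = True
        self.screen_on = False

    def on_away_from_ear(self):
        # The directly opposite gesture reverses the state change.
        self._unlock()

    def on_manual_unlock(self):
        # Non-gestural fallback (e.g. the power/lock button) must always work.
        self._unlock()

    def _unlock(self):
        self.locked = False
        self.screen_on = True
```

Because both paths call `_unlock`, the gesture stays a shortcut to an existing feature rather than the only way out of the locked state.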

For Process Initiation, usually used with transactional features such as NFC payment, the gesture towards the reader should be considered to be the same as the user selecting an on-screen function to open a shortcut; this is simply the first step of the process. Additional explicit actions must be taken to confirm the remainder of the transaction.
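A minimal sketch of this rule, assuming a hypothetical `PaymentFlow` class: approaching the reader only opens the process, and nothing completes without a separate explicit confirmation.

```python
from enum import Enum, auto


class PaymentState(Enum):
    IDLE = auto()
    AWAITING_CONFIRMATION = auto()
    COMPLETED = auto()
    CANCELLED = auto()


class PaymentFlow:
    """The gesture opens the flow; only an explicit action can complete it."""

    def __init__(self):
        self.state = PaymentState.IDLE

    def on_reader_detected(self):
        # Equivalent to the user tapping a shortcut: first step only.
        if self.state is PaymentState.IDLE:
            self.state = PaymentState.AWAITING_CONFIRMATION
        return self.state

    def confirm(self):
        # Confirmation is ignored unless the flow was actually opened.
        if self.state is PaymentState.AWAITING_CONFIRMATION:
            self.state = PaymentState.COMPLETED
        return self.state

    def cancel(self):
        if self.state is PaymentState.AWAITING_CONFIRMATION:
            self.state = PaymentState.CANCELLED
        return self.state
```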

Kinesthetic gestures are not as well known as On-Screen Gestures so may be unexpected to some users. Settings should be provided to disable (or enable) certain behaviors.

Presentation Details

When reacting to another device, such as an NFC reader, make sure the positional relationship between the devices is implied (or explicitly communicated) by position of elements on the screen.

When gesture initiates a process, use Tones, Voice Notifications and Haptic Output, along with on-screen displays, to notify the user that the application has opened. Especially for functions with financial liability, this will reassure the user that the device cannot take action without them.

When a sensor is obvious, an LED may be used on or adjacent to the apparent location of the sensor. For the NFC reader example, an icon will often accompany the sensor, and can be additionally illuminated when it is active. This is used in much the same manner as a light on a camera, so surreptitious use of the sensor is avoided. This is already common on the "PIN Pad" readers for NFC cards, and when handsets carry NFC, they should use similar methods.

When using Kinesthetic Gestures for control, such as scrolling a page, you should include an on-screen indicator that scrolling is deliberately active. You can use this to reinforce the reason the device is scrolling, and to give the user something to interact with. Especially if the indicator shows the degree of the gesture, the user can not only observe the output (scroll speed) but also has a direct way to control the gesture.
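Tilt-to-scroll with a visible degree indicator might be computed as below. The function name, the dead zone, the maximum tilt and the maximum speed are all illustrative assumptions; the second return value is what an on-screen indicator would display.

```python
def tilt_to_scroll(tilt_deg, dead_zone_deg=5.0, max_tilt_deg=30.0, max_speed=1200.0):
    """Map a tilt angle to (scroll speed in px/s, indicator level in -1.0..1.0)."""
    magnitude = abs(tilt_deg)
    if magnitude <= dead_zone_deg:
        # Small tilts are treated as incidental, so nothing scrolls.
        return 0.0, 0.0
    clamped = min(magnitude, max_tilt_deg)
    # Scale linearly from the edge of the dead zone up to the maximum tilt.
    level = (clamped - dead_zone_deg) / (max_tilt_deg - dead_zone_deg)
    sign = 1.0 if tilt_deg > 0 else -1.0
    return sign * level * max_speed, sign * level
```

The dead zone keeps ordinary hand tremor from scrolling the page, and feeding the same `level` to the indicator lets the user see how much of the gesture is being applied.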

Kinesthetic Gestures are not expected in mobile devices, so users will have to be educated as to their purpose, function and interaction. This can be done with:

- Advertising.

- Printed information in the box (when available).

- First-run demonstrations.

- First-run (or early-life) Tooltip hints.

- Describing the function well in settings panels.

- Leaving on by default, and explaining the function via Tooltip. An Annotation may be preferred for some cases, so the user can immediately change or disable the function.

Antipatterns

Whole-device Kinesthetic Gestures can also be used as a pointer, or method of scrolling. These are not yet consistently applied, so require instruction, icons and overlays to explain and indicate when the effect is being used, and controls to disable them when not desired. Their use in games (think of the roll-the-ball-into-a-pocket type) is due to the difficulty of control; this should indicate that using gestural pointing as a matter of course can be troublesome.

Avoid using graphics which indicate position when they cannot be made to reflect the actual relationship. For example, do not use a graphic that shows data transferring between devices next to each other, if the remote device can only connect through a radio clearly located on the bottom of the device.

Use caution when designing these gestures. Actions and their gestures must be carefully mapped to be sensible, and must not conflict with other gestures. Shake to send, for example, makes no sense. But a "flick away" to send data would be okay; like casting a fishing line, it implies something departs the user's device and is sent into the distance. Since information is often visualized as an object, extending the object metaphor tends to work.

Next: Remote Gestures

Discuss & Add

Please do not change content above this line, as it's a perfect match with the printed book. Everything else you want to add goes down here.
