Revision 17 as of 2013-09-19 15:53:21


Problem

You must use non-verbal auditory tones to provide feedback or alert users to conditions or events, without them becoming confusing, lost in the background or so frequent that critical alerts are disregarded.

Practically all mobile devices have audio output of some sort, and almost every application or website can access it. Some devices impose strict limits that restrict the use of some tones, such as devices that only output audio over headsets, or that only send phone call audio over Bluetooth.

Solution

Interaction design, like much design work, focuses heavily on the visual components. However, users of all devices have a multitude of senses, all of which can and should be engaged to provide a more complete experience.

Mobiles are carried all the time, often out of sight, and must use audio alerting and Haptic Output to get the user's attention, or to communicate information more rapidly and clearly. Tones should be used not just for alerting, or for problems, but as a secondary channel to emphasize successful input, such as on-screen and hardware button presses, scrolls and other interactions.

Alerts are used to regain user focus on the device. Additional communication can then be made to the user, usually visually, with on-screen display of Notifications or other features. However, this may also involve Voice Readback or initiating Voice Reminders.

Whenever implementing this pattern, be aware of the danger of the "better safe than sorry" approach to auditory Tones. Too much sound can be as bad as too little by adding confusion or inducing "alarm fatigue." At its worst, this can be akin to the well-known phenomenon of "banner blindness," with some or all device tones simply edited out by the user's brain.

Variations

Feedback tones provide a direct response to a user action. You can play a tone as an alternate, immediate confirmation that the system has accepted the user action. This is usually a direct action such as a button press or gesture. This style of feedback developed in the machine era, because the user's fingers and hands obscured the input devices; even when a button moved or illuminated, adding auditory and Haptic Output made input more efficient.

Alerts notify the user of a condition that occurs independent of any user action, such as a calendar appointment or new SMS. These also date back long before electronic systems. Because they must manage a multitude of alarms, control systems such as aircraft and power plants are more useful antecedents to study than simple items like alarm clocks.

Delayed response alerts exist in a difficult space between these two. For example, a user submits an action, and a remote system responds with an error several seconds later. The response is in context, so it is similar to a feedback tone, but like an alert it must also prompt the user to look at the screen again.
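The line between a feedback tone and a delayed response alert can be expressed as a timing rule. The sketch below is only an illustration: it assumes a hypothetical one-second window after which the user may have looked away, and the tone names (`feedback_ok`, `feedback_error`, `delayed_error_alert`) are placeholders, not any platform's API.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: after this many seconds, assume the user may have
# stopped looking at the screen.
FEEDBACK_WINDOW_S = 1.0

@dataclass
class Response:
    ok: bool          # did the remote system accept the action?
    elapsed_s: float  # seconds between the user's action and the response

def choose_tone(response: Response) -> Optional[str]:
    """Return a placeholder tone name for a remote response, or None for silence."""
    if response.elapsed_s <= FEEDBACK_WINDOW_S:
        # Still within the interaction: behave like ordinary feedback.
        return "feedback_ok" if response.ok else "feedback_error"
    # Late response. Assumption: a late success needs no sound, but a late
    # error must pull the user back to the screen, like an alert.
    return None if response.ok else "delayed_error_alert"
```

For example, `choose_tone(Response(ok=False, elapsed_s=5.0))` returns `"delayed_error_alert"`, so a remote error that arrives after the user has turned away still makes a sound.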

Interaction Details

Phenomena like the McGurk effect -- where what a listener sees changes which speech sound they hear -- appear to exist for other types of perception. Tones should support interaction that is directly related to the visual portions of the interface.

Tones must always correspond to at least one visual or tactile component. If an alert sounds, you should always make sure there is a clear, actionable on-screen element. These should always appear in the expected manner for the type of event. Feedback tones correspond to keypresses or other actions the user takes, and alert tones should display in the conventional method the device uses for Notifications.

Displayed notifications must not disappear without deliberate user input. Users may not be able to see the screen or act on a particular alert for some time; the visual display to explain the alert must remain in place.

Use caution with reminder alerts, which announce the same alert repeatedly. You should generally only use these for conditions that are time sensitive and require the user to perform a real-world action, such as an alarm clock. There is generally no need to repeatedly sound an audible alert for normal priority items such as email or SMS messages.
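The reminder rule above can be reduced to a small policy function. This is a sketch under stated assumptions: the `Alert` fields and the 60-second `REMINDER_INTERVAL_S` are hypothetical, chosen only to show that repetition is reserved for time-sensitive conditions that need a real-world action.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical repeat interval for reminders, in seconds.
REMINDER_INTERVAL_S = 60

@dataclass
class Alert:
    name: str                      # e.g. "alarm_clock", "sms" (illustrative)
    time_sensitive: bool           # loses value if not handled soon
    needs_real_world_action: bool  # e.g. waking up, leaving for a meeting

def reminder_interval(alert: Alert) -> Optional[int]:
    """Seconds until the tone repeats, or None if it should sound only once."""
    if alert.time_sensitive and alert.needs_real_world_action:
        return REMINDER_INTERVAL_S
    # Normal priority items such as email or SMS: one tone is enough.
    return None
```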

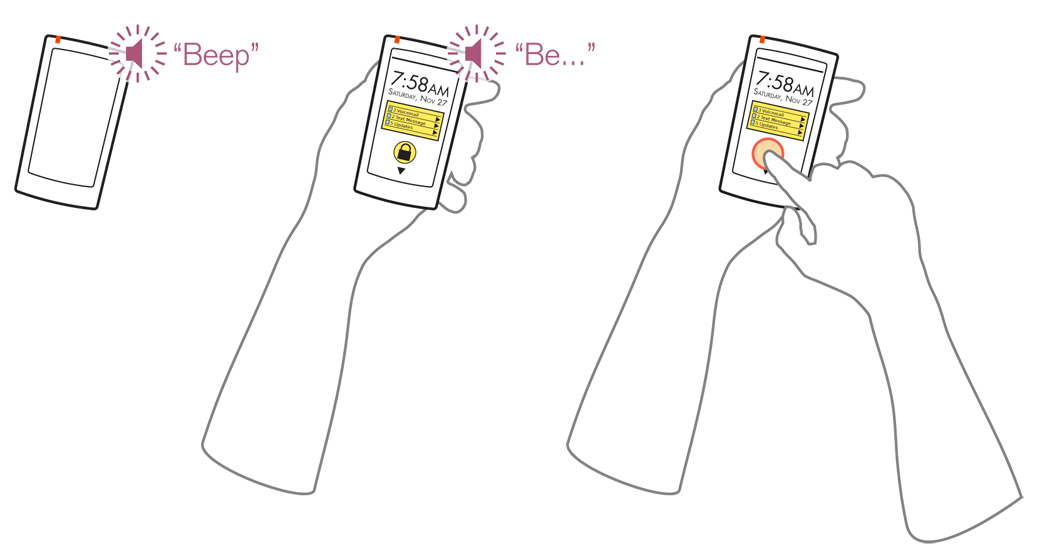

Alert silencing -- removing the auditory portion, or the audible and LED blinking portion of an alert -- should be independent of the other parts of the notification. It should be possible to silence an alert without dismissing the entire condition. Often, this can be done by simply opening or unlocking the device; if the device was previously locked, you can assume the user has seen and acknowledged all conditions. You can stop playing tones, but until the user has explicitly dismissed the message, the alert condition should remain marked as unread.
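Separating silencing from dismissal is easiest to see as two distinct operations on one alert's state. The sketch below is illustrative only; `AlertState`, `silence()` and `dismiss()` are hypothetical names, not a platform notification API.

```python
from dataclasses import dataclass

@dataclass
class AlertState:
    """Illustrative presentation state for a single alert."""
    tone_playing: bool = True
    led_blinking: bool = True
    unread: bool = True

    def silence(self, stop_led: bool = False) -> None:
        """Power button or unlock: stop the audio (and optionally the LED) only."""
        self.tone_playing = False
        if stop_led:
            self.led_blinking = False
        # `unread` is deliberately left alone: the condition is still pending.

    def dismiss(self) -> None:
        """Explicit user dismissal clears the whole alert condition."""
        self.tone_playing = False
        self.led_blinking = False
        self.unread = False
```

Tapping the power button would call `silence()`, leaving the LED and the unread notification in place; only an explicit user dismissal calls `dismiss()`.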

Presentation Details

Any Tones you send must correspond to the class and type of action, and should imply something about the action by the type of tone. Clicks denote keypresses, whereas buzzers denote errors, for example.

Sound design is an entire field of study. If sounds must be created from scratch, hire a sound designer to develop the tones based on established principles. Be sure to document the meaning -- and emotional characteristics -- of each sound.
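One way to keep each tone tied to a class of action, with its meaning and emotional character written down, is a small catalog shared by designers and developers. This is a sketch: the `ToneClass` values, file names and descriptions are hypothetical placeholders, not recommendations.

```python
from dataclasses import dataclass
from enum import Enum

class ToneClass(Enum):
    KEYPRESS = "keypress"
    ERROR = "error"
    ALERT = "alert"

@dataclass(frozen=True)
class ToneSpec:
    asset: str    # hypothetical sound file delivered by the sound designer
    meaning: str  # what the tone tells the user
    emotion: str  # intended emotional character

# Illustrative catalog: every class of action has exactly one documented tone.
TONE_CATALOG = {
    ToneClass.KEYPRESS: ToneSpec("click.wav", "input accepted", "neutral, unobtrusive"),
    ToneClass.ERROR: ToneSpec("buzz.wav", "action failed", "urgent, mildly negative"),
    ToneClass.ALERT: ToneSpec("chime.wav", "look at the screen", "attention-getting, calm"),
}

def tone_for(tone_class: ToneClass) -> ToneSpec:
    """Look up the single tone documented for a class of action."""
    return TONE_CATALOG[tone_class]
```

Keeping the catalog small and one-to-one also makes it easy to check that no two classes of action share a sound.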

Vibration and audio are closely coupled, so consider them together. If Tones are used in concert with Haptic Output, ensure they do not conflict with each other. For devices without haptic or vibration hardware, audio can serve this need in a limited manner. Short, sharp tones played through the device speaker, especially those at very low frequencies, can provide a tactile response greater than their audible response. Test on the actual hardware to be used, to make sure the device has appropriate responsiveness, and that low frequency tones do not induce unwanted buzzing.
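A short, sharp, low-frequency pulse can be synthesized directly, which makes it easy to try variations on the target hardware. This is a minimal sketch assuming an 80 Hz, 30 ms burst with an exponential decay; those values are placeholders to tune by testing on the device, and `pulse_samples` and `write_wav` are hypothetical helper names. Only Python's standard `wave` and `struct` modules are used.

```python
import math
import struct
import wave

SAMPLE_RATE = 44100  # samples per second

def pulse_samples(freq_hz=80.0, duration_s=0.03, amplitude=0.9, sample_rate=SAMPLE_RATE):
    """Short, sharp sine burst with an exponential decay, as floats in [-1, 1]."""
    n = int(duration_s * sample_rate)
    tau = duration_s / 4  # decays to about 2% of full level by the end
    return [
        amplitude * math.exp(-(i / sample_rate) / tau)
        * math.sin(2 * math.pi * freq_hz * i / sample_rate)
        for i in range(n)
    ]

def write_wav(path, samples, sample_rate=SAMPLE_RATE):
    """Write samples as mono, 16-bit PCM for testing on the target device."""
    frames = b"".join(
        struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
    )
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(sample_rate)
        w.writeframes(frames)
```

For example, `write_wav("pulse.wav", pulse_samples())` produces a file (the name is arbitrary) that can be loaded onto the device to check responsiveness and listen for unwanted buzzing.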

The illusory continuity of tones can be exploited in the same way that persistence of vision is exploited for video playback. Simple tones can be output with gaps, or in a rapid stairstep, and still be perceived as a continuous tone. You can use this to simulate higher fidelity audio than constrained processing hardware can otherwise produce, or to make audio output cheaper when the rest of your application is using most of the processor.
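A rapid stairstep is cheap to generate: each step is a plain sine at a fixed frequency, so only a constant phase increment is needed per step. This sketch assumes a low 8 kHz sample rate as a stand-in for constrained hardware; `stairstep_glide` is a hypothetical name, and the step count and step length that read as a smooth glide should be checked by ear.

```python
import math

def stairstep_glide(f_start, f_end, steps, step_s, sample_rate=8000):
    """Approximate a smooth pitch glide with `steps` constant-frequency segments.

    Frequencies are spaced geometrically (equal musical intervals). The phase
    carries over between segments, so the waveform itself has no jumps.
    """
    n_per_step = int(step_s * sample_rate)
    samples = []
    phase = 0.0
    for k in range(steps):
        f = f_start * (f_end / f_start) ** (k / max(1, steps - 1))
        increment = 2 * math.pi * f / sample_rate  # constant within a step
        for _ in range(n_per_step):
            samples.append(math.sin(phase))
            phase = (phase + increment) % (2 * math.pi)
    return samples
```

For example, `stairstep_glide(440, 880, 8, 0.02)` rises one octave in eight 20 ms steps.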

Do not allow alerts or other Tones to override voice communications. Many other types of audio output, such as video or music playback, may also require some tones to be greatly reduced or entirely eliminated.
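The precedence rule above can be sketched as a gain decision made before any tone plays. The categories in `ActiveAudio` and the 0.3 ducking level are assumptions for illustration; on a real device, the platform's own audio focus or audio session rules should be respected as well.

```python
from enum import IntEnum

class ActiveAudio(IntEnum):
    """What the device is already playing (illustrative categories)."""
    NONE = 0
    MEDIA = 1       # music or video playback
    VOICE_CALL = 2  # voice communications always win

# Hypothetical gain applied to critical tones during media playback.
MEDIA_DUCK_GAIN = 0.3

def tone_gain(active: ActiveAudio, critical: bool) -> float:
    """Gain multiplier (0.0 to 1.0) for a tone, given the current audio activity."""
    if active == ActiveAudio.VOICE_CALL:
        return 0.0  # never let a tone override voice communications
    if active == ActiveAudio.MEDIA:
        # Reduce critical tones, drop the rest entirely.
        return MEDIA_DUCK_GAIN if critical else 0.0
    return 1.0
```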

Generally, alerts can be grouped. If three calendar appointments occur at the same time, only one alert Tone needs to be played, not three in sequence. Never play more than one tone at a time. You only need to get the user's attention once for them to glance at the screen and see the details.
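Grouping can be done by batching alerts that arrive close together, then playing exactly one tone per batch. This is a sketch: the `(timestamp_s, kind)` tuples and the one-second `window_s` are assumptions, and `group_alerts` is a hypothetical helper.

```python
def group_alerts(alerts, window_s=1.0):
    """Group (timestamp_s, kind) alerts into batches that share a single tone.

    An alert joins the current batch if it arrives within `window_s` of the
    batch's first alert; otherwise it starts a new batch.
    """
    batches = []
    for ts, kind in sorted(alerts):
        if batches and ts - batches[-1][0][0] <= window_s:
            batches[-1].append((ts, kind))
        else:
            batches.append([(ts, kind)])
    return batches
```

Three simultaneous calendar alerts form one batch and so produce one tone; each batch's tone should start only after the previous one has finished, so tones never overlap.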

Use caution when multiple alerts closely follow each other. If the user has not acknowledged an incoming SMS, and a new voicemail arrives moments later, there may be no need to play the second alert tone. The delay required before playing a second alert Tone depends on the user, the type of alert, and the context of use.
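A simple gate can suppress a follow-up tone while an earlier alert is still unacknowledged and recent. This is an illustrative sketch: `AlertToneGate` is a hypothetical name, and the 120-second quiet period is an arbitrary placeholder for the user- and context-dependent delay described above.

```python
from typing import Optional

class AlertToneGate:
    """Decide whether a new alert deserves its own tone (hypothetical policy)."""

    def __init__(self, quiet_period_s: float = 120.0):
        self.quiet_period_s = quiet_period_s  # assumption; tune per user and context
        self.last_tone_at: Optional[float] = None
        self.unacknowledged = False

    def on_alert(self, now: float) -> bool:
        """Return True if a tone should play for an alert arriving at `now` (seconds)."""
        recent = (self.last_tone_at is not None
                  and now - self.last_tone_at < self.quiet_period_s)
        if self.unacknowledged and recent:
            return False  # the user has not looked yet; one tone was enough
        self.last_tone_at = now
        self.unacknowledged = True
        return True

    def on_acknowledged(self) -> None:
        """Call when the user looks at or acts on the pending alerts."""
        self.unacknowledged = False
```

Suppressed alerts do not restart the quiet period, so a steady trickle of messages cannot keep the device silent indefinitely.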

Carefully consider audibility, or how well any sound can be perceived as separate from the background. You can make automatic adjustments by opening the microphone and analyzing ambient noise. However, do not simply make sounds louder to compensate. Adjust the pitch, or select pre-built variations more appropriate to the environment.
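Once ambient audio has been captured, its level can drive the choice among pre-built variations rather than a volume change. This sketch assumes microphone samples are already available as floats in [-1, 1] (capture is platform-specific and not shown); the dBFS thresholds and variant names in `VARIANTS` are hypothetical.

```python
import math

def rms_dbfs(samples):
    """RMS level of float samples in [-1, 1], in dB relative to full scale."""
    if not samples:
        return float("-inf")
    rms = math.sqrt(sum(s * s for s in samples) / len(samples))
    return 20 * math.log10(rms) if rms > 0 else float("-inf")

# Hypothetical pre-built variants, from quiet to noisy environments. Each one
# differs in pitch and character, not just in loudness.
VARIANTS = [(-50.0, "soft_chime"), (-30.0, "bright_chime"), (float("inf"), "sharp_pattern")]

def pick_variant(mic_samples):
    """Choose a tone variant for the measured ambient level."""
    level = rms_dbfs(mic_samples)
    for threshold, name in VARIANTS:
        if level < threshold:
            return name
    return VARIANTS[-1][1]
```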

Be aware of the relationship between pitch and sound pressure (tones at the same sound pressure level are perceived as louder or quieter depending on their frequency), and adjust based on output volume. Consider using auditory patterns (or tricks) like Shepard-Risset tones to simulate rising or falling tones, which can be more easily picked out of the background, or located in space.
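A Shepard-Risset glissando is built from partials spaced one octave apart that glide together under a fixed loudness envelope over log frequency, so the sound seems to rise (or fall) without end. This is a sketch of that standard construction; `shepard_risset` is a hypothetical name, and the Gaussian envelope and default parameters are illustrative assumptions rather than values tuned for audibility.

```python
import math

def shepard_risset(duration_s=4.0, cycle_s=2.0, f_min=40.0, octaves=8,
                   sample_rate=22050, rising=True):
    """Shepard-Risset glissando that seems to rise (or fall) without end.

    Partials sit one octave apart and all glide at the same rate along a
    log-frequency axis, each wrapping from one end of the range to the other.
    A fixed Gaussian envelope over log frequency keeps partials near the edges
    at about 1% amplitude, so the wrap is not heard. Keep f_min * 2**octaves
    below sample_rate / 2.
    """
    n = int(duration_s * sample_rate)
    direction = 1.0 if rising else -1.0
    sigma = octaves / 6.0          # envelope width, in octaves
    phases = [0.0] * octaves       # one running phase per partial
    out = []
    for i in range(n):
        travelled = direction * (i / sample_rate) / cycle_s  # octaves moved so far
        sample = 0.0
        for k in range(octaves):
            pos = (k + travelled) % octaves  # position in octaves above f_min
            freq = f_min * 2.0 ** pos
            amp = math.exp(-0.5 * ((pos - octaves / 2) / sigma) ** 2)
            phases[k] = (phases[k] + 2 * math.pi * freq / sample_rate) % (2 * math.pi)
            sample += amp * math.sin(phases[k])
        out.append(sample / octaves)  # scale the sum back into [-1, 1]
    return out
```

Passing `rising=False` gives the falling version. The envelope here is a simplification; it does not compensate for how perceived loudness changes with frequency.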

Antipatterns

Alarm fatigue is the greatest risk when designing notification or response Tones. If your tones are too generic, too common, or too similar to other sounds in the environment (whether natural sounds or electronic tones from other devices), the user can discount them. This may be deliberate, where the user consciously discards "yet another alert" for a time, or unconscious, as the user's auditory center eventually filters out repeated tones as noise. This can even result in users sleeping through quite loud alarms because their brain treats the tone as noise. Use as few different sounds as possible.

Do not go too far the other way and provide no auditory feedback, especially for errors. Delayed response conditions are especially prone to having no audible component. This creates a serious risk, as users may believe they have sent a message or set a condition to be alerted later, and may not look at the device for some time.

It is even possible for accidental entry, or deliberate eyes-off entry, to clear a visible error so users are not informed of alerts or delayed responses at all. Be sure to use notification Tones whenever the condition calls for it.

Keep in mind that users may be wearing headsets. Using tones to emulate Haptic Response will not work in this case, and can be very annoying. Consider changing the output when headsets are in use. In addition, some headsets will not accept all output, so if your application relies on tones, make sure there is a way to have the device still make these sounds.
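The headset guidance can be sketched as a routing decision made per tone. Everything here is hypothetical: the `Route` fields stand in for whatever the platform reports about the connected headset, and the tone kinds and return values are illustrative labels.

```python
from dataclasses import dataclass

@dataclass
class Route:
    headset_connected: bool
    headset_plays_notifications: bool  # some headsets only carry call audio

def plan_tone(kind: str, route: Route) -> str:
    """Return where to play a tone: 'speaker', 'headset', or 'skip'.

    `kind` is 'haptic_emulation' for a short pulse standing in for vibration;
    any other kind (alerts, feedback) follows the normal routing rules.
    """
    if not route.headset_connected:
        return "speaker"
    if kind == "haptic_emulation":
        return "skip"  # a pulse meant to be felt is only an annoying thump in the ear
    if route.headset_plays_notifications:
        return "headset"
    return "speaker"  # fall back so the tone still sounds
```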

Next: Voice Input

Discuss & Add

Please do not change content above this line, as it's a perfect match with the printed book. Everything else you want to add goes down here.

Sonification

Check this out: The Sonification Handbook (http://sonification.de/handbook/)

Sonification conveys information by using non-speech sounds. To listen to data as sound and noise can be a surprising new experience with diverse applications ranging from novel interfaces for visually impaired people to data analysis problems in many scientific fields. This book gives a solid introduction to the field of auditory display, the techniques for sonification, suitable technologies for developing sonification algorithms, and the most promising application areas. The book is accompanied by the online repository of sound examples.

Examples

If you want to add examples (and we occasionally do also) add them here.

Make a new section

Just like this. If, for example, you want to argue about the differences between, say, Tidwell's Vertical Stack, and our general concept of the List, then add a section to discuss. If we're successful, we'll get to make a new edition and will take all these discussions into account.